What do you think of when you hear the word ‘bot’? Nine times out of ten, your mind is probably drawn to the malicious botnets or bots that eat your ad budget and plague the digital advertising industry at large.

Various studies have shown that around 40% of internet traffic is now driven by bots, yet not all of those bots are created equal. Bots tend to get a bad reputation, but knowing the difference between good and bad bots is essential.

Good bots often have many of the same characteristics as bad bots, and so for those in the online ad world, the challenge is threefold: how to detect a bot in the first place, how to avoid blocking good bots, and most importantly, how to prevent bad bots.

Here, we’ll go through the differences between good and bad bots, common signs your organization might be affected by bad bots, and the mechanisms used to detect them.

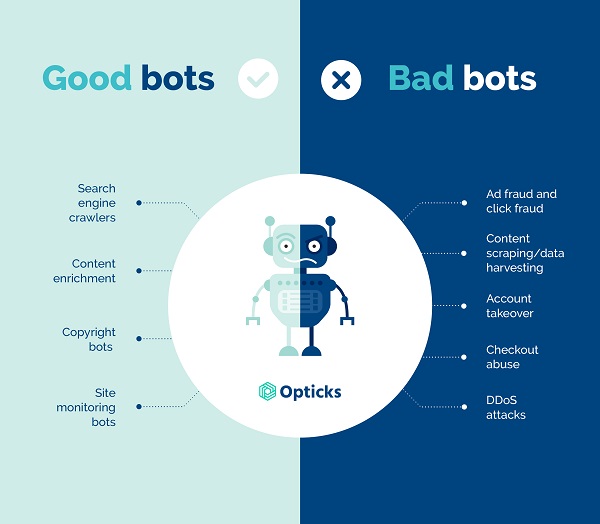

Know the difference between good bots and bad bots

Even though almost 25% of bot traffic came from malicious bots in 2019, not all bot traffic is bad traffic.

Knowing the difference between good and bad bots will help you learn how to detect the kind of bot you don’t want interacting with your ads.

Good bots versus bad bots

Good and bad bots operate in the same automated way, but for vastly different purposes. Good bots are used to perform helpful, useful, and legitimate tasks, while bad bots are used to perform malicious activities, such as ad fraud.

Good bot examples

Though they don’t receive the same amount of attention as bad bots, there are plenty of examples of good bots that the average web user isn’t even aware of.

- Search engine crawlers: Also known as spiders, these bots crawl content on almost every website on the web and then index it so that it shows up in search engine results for user searches.

- Content enrichment: As the name suggests, these bots enhance digital content. For example, the messaging platform WhatsApp uses bots to automatically enrich messages that contain a link by adding a preview image.

- Copyright bots: These analyze audio and video content and compare it against a database of known content to identify copyright breaches.

- Site monitoring bots: These bots monitor websites to detect system outages and alert users of downtime or loading issues.

Bad bot examples

Many ad campaigns are ruined by the presence of bad bot traffic. Here are just a few examples of the ways that bad bots perform harmful activities.

- Ad fraud and click fraud: Though there are many types of ad fraud, these bots most commonly operate by repeatedly clicking on ads or by fraudulently claiming compensation for performance marketing events such as conversions, draining advertising budgets in the process.

- Content scraping and data harvesting: Unlike search engine crawlers, these bots are designed to fraudulently scrape content and strategic data from other websites, such as prices, product descriptions, and original content.

- Account takeover: These bots can imitate user behavior and hide inside an already validated user session by running as malware. Subtypes of account takeovers include credential cracking, credential stuffing, fake account creation, and bonus abuse.

- Checkout abuse: These bots can be used to quickly purchase entire inventories of a product that’s in high demand, particularly those that have just been launched. Subtypes of checkout abuse include scalping, spinning, inventory exhaustion/hoarding, sniping, and discount abuse.

- DDoS attacks: DDoS (Distributed Denial of Service) attacks take websites down by overloading them with queries and requests, making them unavailable to real users. They are also often used to weaken network security layers so that attackers can break in while the system is compromised.

Botnets are a more sophisticated type of bad bot: networks of compromised devices that are controlled remotely by threat actors. A single botnet can carry out multiple malicious activities, such as ad fraud, identity theft, and DDoS attacks.

Bad bots getting badder: The four generations of bots

Bad bots are constantly evolving to become more sophisticated and to simulate human behavior more convincingly than ever before. Now that we know what a bad bot looks like, let’s explore the four generations of bots and how difficult each one is to detect.

First-generation bots

These are single-task bots that perform automated tasks such as website scraping and form filling. These types of bots are easy to defend against since they are created with very simple scripting tools.
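To see why first-generation bots are so easy to stop, here is a minimal sketch of the kind of check that catches them: flag any request that sends no User-Agent header, or the default one from a common scripting tool. The marker list is a hypothetical starting point rather than an exhaustive deny-list, and because the header is trivially spoofed, this only works against the simplest scripts.

```python
from typing import Optional

# Hypothetical, deliberately short list: substrings found in the default
# User-Agent strings of common scripting tools (e.g. "curl/8.4.0").
SCRIPT_UA_MARKERS = ("curl/", "wget/", "python-requests/", "python-urllib/", "scrapy/")


def looks_like_simple_script(user_agent: Optional[str]) -> bool:
    """Flag a request whose User-Agent is missing or belongs to a scripting tool."""
    if not user_agent:
        # Many quick scripts send no User-Agent header at all.
        return True
    ua = user_agent.lower()
    return any(marker in ua for marker in SCRIPT_UA_MARKERS)


print(looks_like_simple_script("curl/8.4.0"))  # True
print(looks_like_simple_script(
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
))  # False
```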

Second-generation bots

These bots run browsers in “headless” mode (a full browser engine without a visible interface), which lets them bypass first-generation bot defenses. Second-generation bots can execute JavaScript and maintain cookies to automate the control of a website. They are usually used for DDoS attacks, web scraping, and ad fraud.
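A common defense against first-generation bots is a JavaScript challenge: the page runs a small script that writes a server-issued token into a cookie, and requests without that cookie are treated as automated. The sketch below assumes the page embeds `challenge_token(client_ip)` in such a script and that the cookie is named `js_challenge` (both names are hypothetical). A plain HTTP client that never executes JavaScript fails the check, but a headless browser runs the script and returns the cookie like any real browser, which is exactly how second-generation bots get past this kind of defense.

```python
import hashlib
import hmac

# Hypothetical secret; load a real one from configuration, never hard-code it.
SECRET_KEY = b"replace-with-a-real-secret"


def challenge_token(client_ip: str) -> str:
    """Token the page's script is expected to copy into the js_challenge cookie."""
    return hmac.new(SECRET_KEY, client_ip.encode(), hashlib.sha256).hexdigest()


def passed_js_challenge(client_ip: str, cookies: dict) -> bool:
    """True only if the client ran the script and sent the matching cookie back."""
    token = cookies.get("js_challenge")  # hypothetical cookie name
    if token is None:
        return False  # no JavaScript executed, or cookies discarded
    expected = challenge_token(client_ip)
    # Constant-time comparison avoids leaking how much of the token matched.
    return hmac.compare_digest(token.encode(), expected.encode())


ip = "203.0.113.7"
print(passed_js_challenge(ip, {}))  # plain HTTP script: False
print(passed_js_challenge(ip, {"js_challenge": challenge_token(ip)}))  # headless browser: True
```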

Third-generation bots

These bots look like real browsers, are more sophisticated, and can imitate human-like actions such as mouse movements and keystrokes. However, they cannot replicate the “randomness” of real human behavior. Third-generation bots are used for DDoS attacks, identity theft, API abuse, and ad fraud.

Client-side defenses (we’ll explore what these are later in the article) are not sufficient to detect third-generation bots. A server-side approach is necessary to distinguish actual human behavior from simulated human behavior.
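The missing “randomness” can be measured. As a minimal sketch, assuming a client-side script reports click or keystroke timestamps (in milliseconds) to the server for analysis, the coefficient of variation of the gaps between events (standard deviation divided by mean) is close to zero for a machine firing at a fixed rhythm and much higher for a person. The 0.05 threshold is a hypothetical value you would tune on your own traffic.

```python
from statistics import mean, pstdev


def interval_regularity(timestamps_ms: list) -> float:
    """Coefficient of variation (std dev / mean) of the gaps between events.

    Values near 0 mean a metronome-like rhythm; human input is far noisier.
    """
    if len(timestamps_ms) < 3:
        raise ValueError("need at least three events to measure a rhythm")
    gaps = [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]
    if any(gap <= 0 for gap in gaps):
        raise ValueError("timestamps must be strictly increasing")
    return pstdev(gaps) / mean(gaps)


def looks_scripted(timestamps_ms: list, threshold: float = 0.05) -> bool:
    """Hypothetical rule: a near-constant rhythm suggests simulated input."""
    return interval_regularity(timestamps_ms) < threshold


print(looks_scripted([0, 100, 200, 300, 400]))  # evenly spaced clicks: True
print(looks_scripted([0, 180, 260, 610, 700]))  # irregular human rhythm: False
```

Fourth-generation bots defeat this particular check by adding random jitter to their timing.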

Fourth-generation bots

These are the newest iteration of bots, and the most difficult to detect. Fourth-generation bots can mimic the random behaviors and movements that humans make, such as nonlinear mouse movements.

Detecting these bots is harder because you need to pick up very small traces of non-random movement, and tuning detection that finely tends to flag large amounts of real human traffic as bots. Machine learning is usually needed to detect fourth-generation bots.

The 4 signs your organization is being affected by bots

So, if good bots and bad bots are so similar, how can you know if your organization has been targeted by bad bots?

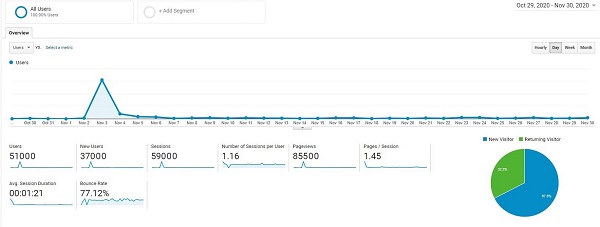

There are multiple common signs that your website traffic is being targeted and affected by bad bots, and they can all be identified using a tool as simple as Google Analytics. Once you know how to detect a bot, you can then begin the process of weeding the bad ones out.

Drastic spikes in traffic

Large spikes in traffic can be a sign of bad bot activity. Site traffic usually grows gradually, in line with organic improvements such as better SERP rankings and backlinks from high-ranking websites, rather than in sudden surges. If you haven’t done anything differently that would explain a large spike in traffic, bad bots are likely to blame.
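If you export daily session counts (for example, from Google Analytics), one rough way to decide whether a spike is “drastic” is to measure how many standard deviations today’s count sits above the recent baseline. This is a minimal sketch: the 3.0 threshold and the 5% floor on the spread are hypothetical defaults, and a real baseline should also account for seasonality and campaign launches.

```python
from statistics import mean, stdev


def is_traffic_spike(history: list, today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's session count if it sits far above the recent daily baseline."""
    if len(history) < 2:
        raise ValueError("need at least two days of history")
    baseline = mean(history)
    # Floor the spread (hypothetical 5% of the baseline) so a very flat history
    # neither divides by zero nor turns small wobbles into alerts.
    spread = max(stdev(history), 0.05 * baseline, 1.0)
    return (today - baseline) / spread > z_threshold


last_week = [1000, 1040, 980, 1010, 990, 1020, 960]
print(is_traffic_spike(last_week, 1100))  # False: within normal variation
print(is_traffic_spike(last_week, 4000))  # True: drastic, unexplained jump
```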

Unusually high bounce rates

An unexpected and unusually high bounce rate coupled with an upswing in new user sessions can be caused by a high level of bot traffic. Conversely, a steep drop in bounce rate can also indicate malicious bot activity.

Slow website and server performance

A slowdown in website and server performance can also be a sign of bad bot activity, as a high volume of bot traffic overloads your server.

Suspicious traffic sources

Traffic trends are an easy way to spot bad bots. You’ve likely been targeted by bad bots if you’re seeing large spikes in traffic coming from one of the following:

- Users in one specific geographical region (especially from a region that’s unlikely to have large numbers of users who speak the site’s native language)

- Very few unique users

- Large numbers of sessions from single IP addresses
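These warning signs can be checked directly against raw session data. The sketch below assumes each session record carries an IP address, a user ID, and a region (the `Session` type and all three thresholds are hypothetical) and reports IPs with unusually many sessions, a single region dominating traffic, and a low ratio of unique users to sessions.

```python
from collections import Counter
from typing import NamedTuple


class Session(NamedTuple):
    """Hypothetical session record; map your analytics export onto these fields."""
    ip: str
    user_id: str
    region: str


def suspicious_source_report(sessions, max_sessions_per_ip=50,
                             max_region_share=0.8, min_unique_user_ratio=0.1):
    """Check session data for the warning signs above (thresholds are hypothetical)."""
    if not sessions:
        raise ValueError("no sessions to analyze")
    total = len(sessions)
    per_ip = Counter(s.ip for s in sessions)
    top_region, top_count = Counter(s.region for s in sessions).most_common(1)[0]
    unique_users = len({s.user_id for s in sessions})
    return {
        # Large numbers of sessions from single IP addresses
        "heavy_ips": sorted(ip for ip, n in per_ip.items() if n > max_sessions_per_ip),
        # Traffic concentrated in one geographical region
        "dominant_region": top_region if top_count / total > max_region_share else None,
        # Very few unique users relative to the number of sessions
        "too_few_unique_users": unique_users / total < min_unique_user_ratio,
    }


# "XX" is a placeholder region code.
sessions = [Session("203.0.113.5", "u1", "XX")] * 100 + [
    Session(f"198.51.100.{i}", f"u{i + 2}", "US") for i in range(5)
]
print(suspicious_source_report(sessions))
# {'heavy_ips': ['203.0.113.5'], 'dominant_region': 'XX', 'too_few_unique_users': True}
```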

How to detect a bot: 4 different mechanisms

Now for the really important part: how to detect a bot.

When first- and second-generation bots were the only bots on the scene, it was common practice to simply block the data center proxy IP addresses from which suspicious activity was detected. However, this method of preventing bad bot activity is no longer sufficient, nor is it fully accurate.

For example, bot developers have worked around IP address blocking by using Luminati, the world’s largest proxy service, to easily spin up millions of bots behind residential IP addresses. Because these addresses belong to ordinary home internet connections, they aren’t flagged as bad bots.
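For reference, the older IP-blocking approach amounts to little more than a range lookup. In this minimal sketch the data center ranges are placeholders taken from the IP blocks reserved for documentation; real deny-lists come from hosting providers’ published ranges or commercial IP intelligence feeds. A bot routed through a residential proxy arrives from an ordinary home ISP address that appears on no such list, so the check simply returns `False`.

```python
import ipaddress

# Placeholder "data center" ranges: these are blocks reserved for documentation
# (RFC 5737), standing in for a real, regularly updated deny-list.
DATACENTER_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def from_datacenter(ip: str) -> bool:
    """True if the address falls inside any listed data center range."""
    addr = ipaddress.ip_address(ip)
    return any(addr in network for network in DATACENTER_RANGES)


print(from_datacenter("198.51.100.23"))  # bot on a cloud server: True
print(from_datacenter("203.0.113.9"))    # stand-in for a residential proxy exit: False
```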

So, which mechanisms are good enough to detect bots? A common technique is to combine server-side and client-side bot detection. Server-side modules collect and analyze HTTP requests, while client-side (in-browser) modules record and analyze behavioral signals. Using this combination usually produces the most accurate bot detection.

Let’s take a look at some of the mechanisms that can help distinguish a good bot from a bad bot:

Fingerprinting technology

Fingerprinting-based detection involves obtaining identifiable data — such as user agent, request headers, and IP addresses — from the browsers and devices used to access websites, in order to ascertain whether they carry any identifying information that indicates bad bot activity. These attributes are then cross-analyzed to determine whether they are consistent with one another.
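Cross-analyzing attributes can be as simple as checking that request headers tell the same story. The two rules in this sketch are illustrative, not a complete rule set: mainstream browsers normally send an `Accept-Language` header, and Chromium-based browsers may send a `Sec-CH-UA-Platform` client hint that should agree with the operating system named in the User-Agent string. The platform-to-token mapping is a hypothetical simplification.

```python
# Hypothetical mapping from Sec-CH-UA-Platform values to the token the
# User-Agent string normally contains for that operating system.
UA_PLATFORM_TOKENS = {
    "Windows": "Windows NT",
    "macOS": "Macintosh",
    "Android": "Android",
    "Linux": "Linux",
}


def fingerprint_inconsistencies(headers: dict) -> list:
    """Return reasons why a request's attributes contradict each other."""
    h = {name.lower(): value for name, value in headers.items()}
    user_agent = h.get("user-agent", "")
    problems = []

    # Mainstream browsers normally send Accept-Language; many scripts do not.
    if "accept-language" not in h:
        problems.append("missing Accept-Language")

    # Client hint values are quoted, e.g. "Windows"; strip the quotes.
    platform = h.get("sec-ch-ua-platform", "").strip('"')
    expected_token = UA_PLATFORM_TOKENS.get(platform)
    if expected_token and expected_token not in user_agent:
        problems.append(f"User-Agent contradicts platform hint {platform!r}")

    return problems


windows_chrome = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                  "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36")
print(fingerprint_inconsistencies({
    "User-Agent": windows_chrome,
    "Sec-CH-UA-Platform": '"macOS"',
}))
# ['missing Accept-Language', "User-Agent contradicts platform hint 'macOS'"]
```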

Behavioral detection

This detection mechanism analyzes human-like behaviors to predict whether a traffic source is genuine or a bot. It is also useful for distinguishing bad bots from good bots.

Here are some of the common activities that are tracked using this detection mechanism:

- Scrolling behavior

- Keystrokes

- Mouse dynamics and movements (non-linear/randomized/patterned)

- Mouse clicks (identifying rhythms)

- Number of requests during a session
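Mouse dynamics are often summarized with a straightness ratio: the straight-line distance between the first and last points of a movement divided by the distance the cursor actually traveled. As a minimal sketch, assuming the client-side script reports each mouse movement as a list of (x, y) points, a session in which every movement is almost perfectly straight looks machine-generated. The 0.99 cut-off is a hypothetical value, not an industry standard.

```python
import math


def path_straightness(points: list) -> float:
    """Straight-line distance / distance traveled; 1.0 means a perfectly straight move."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    traveled = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if traveled == 0:
        return 1.0  # the cursor never moved; treat as degenerate
    return math.dist(points[0], points[-1]) / traveled


def looks_linear(movements: list, threshold: float = 0.99) -> bool:
    """Hypothetical rule: every movement in the session is almost perfectly straight."""
    if not movements:
        return False  # no mouse data, so no evidence either way
    return all(path_straightness(m) >= threshold for m in movements)


ruler_straight = [(0, 0), (10, 0), (20, 0)]
curved = [(0, 0), (5, 5), (10, 0)]
print(path_straightness(curved))                       # ~0.707
print(looks_linear([ruler_straight, ruler_straight]))  # True
print(looks_linear([ruler_straight, curved]))          # False
```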

Statistical analysis

Statistical analysis uses statistical tools (distributions, means, standard deviations, etc.) to infer whether traffic behaves normally or contains strange patterns.

Analyzed variables include:

- Volume of traffic

- Conversion rates

- Distribution of fingerprints

For example, users can configure a rule like this: if a traffic source’s conversion rate is more than two standard deviations away from that of the other traffic sources, block it.
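Here is one way to express that rule, as a minimal sketch: for each source, compare its conversion rate with the mean and standard deviation of all the other sources, so that an outlier does not inflate the spread it is judged against. Using the absolute deviation catches sources that convert suspiciously well and sources that hardly convert at all. The source names and rates in the usage example are made up.

```python
from statistics import mean, stdev


def sources_to_block(conversion_rates: dict, k: float = 2.0) -> list:
    """Return sources whose conversion rate is more than k standard deviations
    away from the mean of all the *other* sources."""
    flagged = []
    for source, rate in conversion_rates.items():
        others = [r for s, r in conversion_rates.items() if s != source]
        if len(others) < 2:
            continue  # stdev needs at least two data points
        mu, sigma = mean(others), stdev(others)
        # abs() catches both unusually high and unusually low conversion rates.
        if abs(rate - mu) > k * sigma:
            flagged.append(source)
    return flagged


rates = {"source_a": 0.031, "source_b": 0.028, "source_c": 0.030,
         "source_d": 0.033, "source_e": 0.029, "source_f": 0.0}
print(sources_to_block(rates))  # ['source_f']
```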

Machine learning

Machine learning identifies recurring patterns at a speed that’s impossible for humans to match. Patterns are extracted from datasets, and algorithms are applied to them to detect new patterns that could be attributed to bad bots, much faster and more efficiently than humans can.
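Production systems use far larger feature sets and more sophisticated models, but k-nearest neighbors, one of the simplest learning algorithms, shows the basic idea of learning from labeled examples. In this sketch, swapped in purely for illustration, each session is described by two hypothetical features (requests per minute, and mouse-path straightness: straight-line distance divided by distance traveled) and receives the majority label of its closest labeled neighbors. The training data is made up.

```python
import math
from collections import Counter

# Hypothetical labeled sessions: ((requests per minute, mouse-path straightness), label).
TRAINING = [
    ((4.0, 0.62), "human"),
    ((6.0, 0.70), "human"),
    ((3.0, 0.55), "human"),
    ((120.0, 0.99), "bot"),
    ((90.0, 1.00), "bot"),
    ((150.0, 0.98), "bot"),
]


def _scaler(rows):
    """Min-max scale each feature so no single feature dominates the distance."""
    columns = list(zip(*(features for features, _ in rows)))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]

    def scale(vec):
        return [(v - lo) / (hi - lo) if hi > lo else 0.0
                for v, lo, hi in zip(vec, lows, highs)]
    return scale


def classify(features, training=TRAINING, k=3):
    """Label a session by majority vote of its k nearest labeled neighbors."""
    scale = _scaler(training)
    target = scale(features)
    nearest = sorted(training, key=lambda row: math.dist(target, scale(row[0])))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(classify((5.0, 0.60)))    # human
print(classify((130.0, 0.99)))  # bot
```

Scaling matters here: without it, requests per minute (values in the hundreds) would swamp straightness (values between 0 and 1) in the distance calculation.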

Make falling victim to bad bots impossible with a professional solution

As bots become more sophisticated, so should your approach to detecting and preventing them. Since not all bot activities are bad activities, you also need to know how to detect a bot in a way that doesn’t interrupt the good that bots can do.

For example, Googlebot, Google’s web crawler, helps rank content for Google’s search engine results pages. Because Google changes the IP addresses Googlebot crawls from frequently, it’s easy to block this bot unintentionally, and a site that does so can lose out in search rankings.
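Rather than allow-listing IP addresses that keep changing, Google recommends verifying Googlebot with DNS: a reverse lookup on the requesting IP should return a host name under googlebot.com or google.com, and a forward lookup on that host name should resolve back to the same IP. The sketch below follows that procedure with Python’s standard `socket` module. The lookup functions are injectable so it can be tested offline, and because `gethostbyname_ex` only returns IPv4 addresses, IPv6 crawlers would need `socket.getaddrinfo` instead.

```python
import socket

# Host name suffixes Google documents for Googlebot; some other Google fetchers
# use further domains, so check Google's current documentation.
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip: str,
                          reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex) -> bool:
    """Reverse-then-forward DNS check; lookups are injectable for offline tests."""
    try:
        hostname, _aliases, _addrs = reverse_lookup(ip)
    except OSError:  # socket.herror / socket.gaierror are OSError subclasses
        return False
    hostname = hostname.rstrip(".").lower()
    if not hostname.endswith(GOOGLE_CRAWLER_SUFFIXES):
        return False  # a spoofed "Googlebot" User-Agent fails here
    try:
        _name, _aliases, addresses = forward_lookup(hostname)
    except OSError:
        return False
    # The host name must resolve back to the IP that made the request
    # (gethostbyname_ex is IPv4-only; IPv6 needs socket.getaddrinfo).
    return ip in addresses
```

DNS lookups are slow, so in practice you would cache the result per IP address rather than repeat the check on every request.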

Telling a good bot from a bad one is crucial, and detecting bad bots is even more so. The best course of action is to invest in a specialist bot management solution that can identify and block bad bots using the techniques discussed in this article.

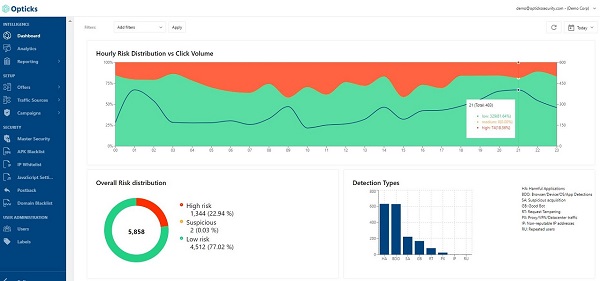

To find out more about how Opticks’ solution applies state-of-the-art machine-learning algorithms and expert rules to detect bad bots and protect your organization, click here to contact us, request a free demo, or start your free trial.